One-vs-all:

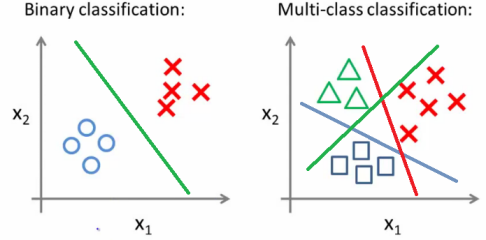

We've used logistic regression to do binary classification, as shown on the left. We can also use the same logistic regression to do multi-class classification.

The idea: how do we do it?

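Multi-class classification can be done by taking one class at a time, merging all the other classes into a single "other" class, and then solving the resulting problem exactly as we did in binary classification. With three classes, this gives three binary classifiers, one per class.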
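To make the idea concrete, here is a small sketch with made-up labels for three classes, numbered 1 to 3 as in the code of this post. For each class c, every example of class c becomes a positive (1) and every other example becomes a negative (0), which turns one three-class problem into three ordinary binary problems. The label values are illustrative only.

```python
import numpy as np

# Made-up labels for 6 training examples drawn from 3 classes (1, 2, 3)
y = np.array([1, 2, 3, 2, 1, 3])

num_labels = 3
binary_labels = {}
for c in range(1, num_labels + 1):
    # Class c is the positive class; all other classes are merged into "other" (0)
    binary_labels[c] = np.where(y == c, 1, 0)
    print(c, binary_labels[c])
```

This prints `1 [1 0 0 0 1 0]`, `2 [0 1 0 1 0 0]` and `3 [0 0 1 0 0 1]`: every example is a positive in exactly one of the three binary problems.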

The code:

The complete code can be found here: https://github.com/geekRishabhjain/MLpersonalLibrary/tree/master/RlearnPy

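```python
import numpy as np

def oneVsAll(self, X, y, num_labels, Lambda):
    m, n = X.shape
    all_theta = []  # one row of n + 1 parameters per class
    all_J = []      # cost histories of all classifiers, concatenated
    # Prepend a column of ones for the bias term
    X = np.hstack((np.ones((m, 1)), X))
    initial_theta = np.zeros((n + 1, 1))
    # Classes are labeled 1..num_labels; train one binary classifier per class
    for c in range(1, num_labels + 1):
        # Class c -> 1, every other class -> 0; 1 and 300 are presumably the
        # learning rate and the number of iterations. Copy initial_theta in case
        # gradient descent updates theta in place.
        theta, J_his = self.gradientDescentReg(
            X, np.where(y == c, 1, 0), initial_theta.copy(), 1, 300, Lambda)
        all_theta.extend(theta.T)
        all_J.extend(J_his)
    return np.array(all_theta).reshape(num_labels, n + 1), all_J
```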

This function returns the theta parameters of every decision boundary in the multi-class problem, one row per class. First, we add a column of ones to X for the bias term. 'num_labels' is the number of classes to be classified; in the image above it is three, so the loop runs three times. In each iteration we run gradient descent on X and a label vector built with the numpy.where function, which is 1 wherever y equals the current class number c and 0 everywhere else.
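The `extend(theta.T)` and `reshape` steps can look puzzling. Assuming gradient descent returns theta as an (n + 1, 1) column vector, `theta.T` has shape (1, n + 1), so `extend` appends exactly one 1-D row per class, and the final `reshape` turns the list into a (num_labels, n + 1) matrix. A small sketch with made-up parameter vectors for two features and three classes:

```python
import numpy as np

n, num_labels = 2, 3
all_theta = []
for c in range(1, num_labels + 1):
    # Made-up stand-in for the (n + 1, 1) column vector learned for class c
    theta = np.full((n + 1, 1), float(c))
    # theta.T has shape (1, n + 1); extend() iterates over its single row
    all_theta.extend(theta.T)

all_theta = np.array(all_theta).reshape(num_labels, n + 1)
print(all_theta.shape)  # (3, 3)
print(all_theta)        # row i holds the parameters for class i + 1
```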

Gradient descent itself is the same as in binary classification. When implementing it, you can minimize either the ordinary costFunction for logistic regression or a regularized costFunction; the latter is why the parameter Lambda is passed to the oneVsAll method.
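The post relies on `gradientDescentReg` from the linked repository, which is not shown here. The sketch below is a hypothetical stand-in named `gradient_descent_reg`, assuming the argument order of the call in oneVsAll (X, y, initial theta, learning rate, number of iterations, Lambda) and that it returns the final theta plus a history of cost values. It uses the standard regularized logistic regression gradient and leaves the bias term theta[0] unregularized; passing Lambda = 0 gives plain logistic regression. The repository's actual implementation may differ.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gradient_descent_reg(X, y, theta, alpha, num_iters, Lambda):
    """Hypothetical stand-in for gradientDescentReg.

    X: (m, n + 1) with a leading column of ones; y: (m, 1) of 0/1 labels;
    theta: (n + 1, 1). Returns the learned theta and the cost after each step.
    """
    m = X.shape[0]
    theta = theta.astype(float).copy()  # never modify the caller's array
    J_history = []
    eps = 1e-12  # keeps log() away from log(0)
    for _ in range(num_iters):
        h = sigmoid(X @ theta)
        grad = (X.T @ (h - y)) / m
        # Regularize every parameter except the bias term theta[0]
        grad[1:] += (Lambda / m) * theta[1:]
        theta -= alpha * grad
        # Regularized logistic regression cost after this step
        h = sigmoid(X @ theta)
        J = (-(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps)).sum() / m
             + (Lambda / (2 * m)) * (theta[1:] ** 2).sum())
        J_history.append(J)
    return theta, J_history
```

Copying theta before updating it matters in a one-vs-all loop: if the update were done in place on the array passed in, every class after the first would silently start from the previous class's parameters instead of from zeros.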

The regularized costFunction is a simple extension of the ordinary costFunction: it adds a penalty on large parameter values, which helps the classifier avoid overfitting the training data and generalize better to new examples. It will be discussed in a later post.
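For reference, a common form of the regularized logistic regression cost (assumed here, since the repository's costFunction is not shown) adds a squared penalty on every parameter except the bias term θ₀. Here h_θ(x) = 1 / (1 + e^(−θᵀx)), m is the number of training examples, n the number of features, and λ corresponds to Lambda:

```latex
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\Big[y^{(i)}\log h_\theta\big(x^{(i)}\big) + \big(1 - y^{(i)}\big)\log\Big(1 - h_\theta\big(x^{(i)}\big)\Big)\Big] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2}

\frac{\partial J}{\partial \theta_0} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_0^{(i)},
\qquad
\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)} + \frac{\lambda}{m}\,\theta_j \quad (j \ge 1)
```

Setting λ = 0 removes the penalty and recovers the ordinary logistic regression cost and gradient.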

The predict function is also similar to binary classification. The difference is that we compute a score for every class and pick the class with the highest score:

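```python
import numpy as np

def predictOneVsAll(self, all_theta, X):
    m = X.shape[0]
    # Prepend the bias column of ones, exactly as in training
    X = np.hstack((np.ones((m, 1)), X))
    # One raw score per example and class: shape (m, num_labels)
    predictions = X @ all_theta.T
    # Highest score wins; +1 maps the 0-based index to class labels 1..num_labels
    return np.argmax(predictions, axis=1) + 1
```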
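Because the sigmoid is monotonically increasing, taking the argmax over the raw scores picks the same class as taking it over the predicted probabilities, so predictOneVsAll can skip the sigmoid. The `+ 1` converts NumPy's 0-based column index back to the 1-based class labels used in training. A small sketch with made-up parameters for three classes and one feature:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Made-up parameters: one row [bias, weight] per class (classes 1, 2, 3)
all_theta = np.array([[0.0, -1.0],
                      [1.0,  0.0],
                      [0.0,  1.0]])
X = np.array([[-3.0], [0.0], [3.0]])

m = X.shape[0]
X1 = np.hstack((np.ones((m, 1)), X))  # prepend the bias column of ones
scores = X1 @ all_theta.T             # (m, num_labels) raw scores
probs = sigmoid(scores)               # per-class probabilities

pred_raw = np.argmax(scores, axis=1) + 1
pred_prob = np.argmax(probs, axis=1) + 1
print(pred_raw, pred_prob)  # [1 2 3] [1 2 3]
```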
